
RAG is (Not) Dead: How to Think about Building RAG Systems

RAG isn't about vector databases and embeddings, or any specific architecture. It's about retrieving relevant context well.

Author: Kyle Mistele (@0xblacklight)

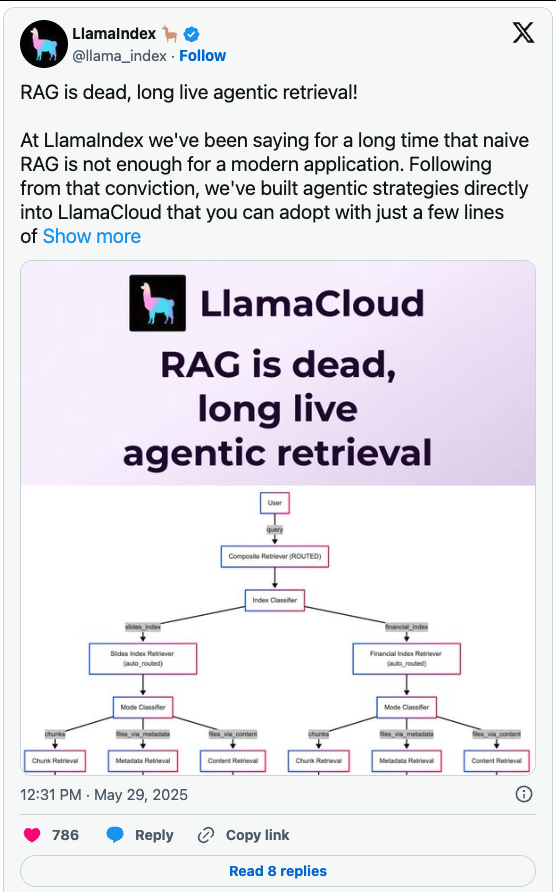

RAG is Dead

"RAG is dead!" the internet says. "Long live <a roundabout description of RAG>!"

The problem? Everything that's being framed as a "RAG Killer" is just another form of RAG. What do I mean? It's simple:

RAG is not about semantic search and vector embeddings.

Nor is it about creating the perfect ontology and implementing graph RAG because a talk at that conference you went to convinced you that it would solve all your problems. Nor is it even about picking a system design from that "RAG Architectures" paper you found on arXiv. RAG is fundamentally about retrieval.

Some have argued that the point is merely semantic, but how you understand this has serious implications for how you design your retrieval system.

In fact, I'm going to argue that if you're building a RAG system, you should not start with vector search. There's a good argument to be made that, depending on what you're building, you shouldn't use it at all.

RAG is About Retrieval

This means that building a RAG system is about building a good retrieval system, not building vector search.

In this context, retrieval refers to the art and science of Information Retrieval (commonly abbreviated as IR), which has existed as a research discipline since at least the 1960s. In other words, retrieval has been around for a lot longer than vector databases and cosine similarity.

Let's refine our taxonomy a bit:

Context engineering involves deliberate control over what information is included, condensed, or excluded from the LLM's context window in order to improve LLM generation quality and reliability.

Retrieval is one way to find additional relevant context to include in your LLM's context window. All retrieval is part of context engineering, but not all of context engineering is about retrieval.

Semantic search is one form of retrieval among many. Importantly, it is not the only type, and it is often not the best type!

You can argue that the point is purely semantic, but I think it's important. Lots of people who are building RAG systems start with vector-based semantic search, not least because vector database companies have an interest in convincing you that their product is synonymous with RAG.

If you're retrieving documents to add to your LLM's context window to improve generation quality, then you're doing RAG. It doesn't really matter how you're doing the retrieval. Any AI system that retrieves information and uses it to augment generation is doing RAG.

Equating RAG with vector search is a particularly pernicious misconception because, in many cases, embedding-based semantic search is not the best form of retrieval for your app!

CAUTION

If you are building a RAG system, you probably shouldn't start with vector embeddings and semantic search.

On the Inadequacy of Semantic Search

A lot of the confusion around RAG and its (supposed) death arises from the inadequacy of vector database-based semantic search approaches for complex retrieval systems and advanced use-cases.

While OpenAI's text embedding models popularized semantic search and led to an explosion of vector embedding-based search, it has become increasingly clear to people building large-scale retrieval systems for complex tasks that semantic search is very, very far from a silver bullet as far as retrieval is concerned.

Vector databases are really useful for some types of retrieval tasks, but they can be entirely inadequate for others. This has a lot to do with how semantic search systems work:

How semantic search works

To build a semantic search system, you need a vector database, an embedding model, and a document corpus. The Massive Text Embedding Benchmark (MTEB) leaderboard is a great starting point for choosing models.

Embedding models represent text as high-dimensional vectors where semantically similar inputs produce similar vectors. The basic process: split documents into chunks within the model's context length, embed all chunks, and store them in your vector database.

For search, embed the query using the same model, then perform cosine similarity search (or other metrics like Euclidean distance, depending on your model and performance needs).
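Here is a minimal, self-contained Python sketch of the ingestion and query steps. It is not a real semantic search system. `embed` is a hypothetical stand-in for an embedding model that hashes words into a fixed-size vector, so it only captures word overlap. `InMemoryVectorIndex` stands in for a vector database and does brute-force cosine search. You would swap in a real embedding model and vector store to get actual semantic similarity.

```python
import hashlib
import math
import re

DIM = 256  # toy dimensionality; real embedding models use hundreds to thousands


def chunk(text: str, max_words: int = 50) -> list[str]:
    """Split text into chunks of at most max_words words (stand-in for token-based chunking)."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def embed(text: str) -> list[float]:
    """Hypothetical stand-in for an embedding model: hashed bag-of-words, L2-normalized.

    This captures word overlap only, not meaning; it exists to show the mechanics.
    """
    vec = [0.0] * DIM
    for token in re.findall(r"[a-z0-9]+", text.lower()):
        bucket = int(hashlib.md5(token.encode()).hexdigest(), 16) % DIM
        vec[bucket] += 1.0
    norm = math.sqrt(sum(x * x for x in vec)) or 1.0
    return [x / norm for x in vec]


def cosine(a: list[float], b: list[float]) -> float:
    # Both vectors are unit-length, so the dot product equals cosine similarity.
    return sum(x * y for x, y in zip(a, b))


class InMemoryVectorIndex:
    """Stand-in for a vector database: stores (chunk, vector) pairs, searches by brute force."""

    def __init__(self) -> None:
        self.entries: list[tuple[str, list[float]]] = []

    def add_document(self, text: str) -> None:
        # Ingestion: chunk the document, embed every chunk, store both.
        for piece in chunk(text):
            self.entries.append((piece, embed(piece)))

    def search(self, query: str, k: int = 3) -> list[tuple[float, str]]:
        # The query must go through the same embedding model as the chunks.
        q = embed(query)
        scored = [(cosine(q, vec), piece) for piece, vec in self.entries]
        return sorted(scored, reverse=True)[:k]
```

The query and the chunks pass through the same `embed` function. Embedding them with different models would make the similarity scores meaningless.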

Crucially, the embedding model defines what "similar" means. This is why specialized models exist for domains like legal or medical applications: "similarity" varies by context. It is also one of the main reasons that LLM apps relying only on semantic search fail: you're using an approach optimized for similarity when similarity isn't what you want.

But before we dig into why you shouldn't use semantic search, let's very briefly cover cases when you should consider it:

When you should use semantic search

You should consider semantic search as a strong contender for your retrieval system if any of the following are true:

- You are building a recommendation system to find documents similar to a given document

- Similarly but more broadly, given a search term, the measure of search result quality is primarily semantic similarity.

- Your document set is large, unstructured, and sparse/fuzzy.

- Your document set contains varying terminology or jargon where keyword-based search would fail to connect related but differently worded documents.

Note that even in some of these cases, there are still approaches you can attempt using more traditional retrieval mechanisms. Now, in that context, let's look at situations where semantic search tends to fail:

Failure Mode #1: Search terms don't resemble your corpus

Remember that embedding models are measuring semantic similarity — if your search terms don't semantically resemble the documents that you embedded, you should stay away from semantic search.

A great example of this is when your corpus is composed of contracts, legal documents, standard operating procedures, or spreadsheets, and your search terms tend to look more like natural-language questions or short phrases.

Failure Mode #2: Semantic similarity isn't the measure of result quality

Consider that you're building a codebase search system for a coding agent, and you want to find useful code snippets based on the function you're currently editing to improve the quality of generation.

While it might be useful for your system to find code snippets similar to the document your agent is currently working on, it's probably more useful to have results that include the definitions of functions and symbols in scope, or usages & test cases for the class/function you're currently working on, or extant documentation for the API that it's a part of.

Failure Mode #3: You're working with code

Building on the previous failure mode: unlike natural language, which is semantic, code is fundamentally syntactic. Code does have semantic properties, such as symbol names and string literals, but these are secondary. Embedding models fine-tuned for code do exist, but you will almost always be better served by a different retrieval strategy.

Remember that it's up to the embedding model to define similarity, and it's not always obvious how it does so.

Failure Mode #4: You're working in a niche domain or with proprietary data

Maybe it is the case that search terms resemble your corpus, and also that semantic similarity is the measure of result quality, and also that you're not working with code. It still might be the case that vector embedding-based semantic search isn't the right approach for you.

Certain domains of knowledge may not be well enough represented in your embedding model's training data for it to reliably distinguish between related but different concepts in the field. You might find that certain concepts are not properly distinguished from each other, or that everything is "too close together" in the embedding space to get useful search results.

Consider domains like healthcare, medicine, agriculture, life sciences research, or advanced legal or engineering domains. The more niche your vertical or knowledge domain, the more likely this is to be the case.

Other reasons not to use semantic search systems

Here are a few other reasons you should strongly consider reaching for other forms of retrieval before semantic search:

- Semantic search is expensive to use compared to other retrieval methods. It requires computing embeddings (usually using an API) for every chunk of every document. If you change your embedding model, you have to re-embed all your documents. Vector databases are also not cheap and entail lots of storage and very large indexes.

- Semantic search is expensive and difficult to maintain for the same reason. Any time a document changes, it has to be re-chunked and re-embedded; otherwise, your data will drift from your embeddings. Technically, this also applies to BM25 and other index-based lexical search implementations. However, most popular (and sane) implementations update the index automatically as part of ingestion. Vectors, on the other hand, usually have to be computed outside the database.

- Semantic search may be fundamentally incapable of retrieving some documents in your corpus, according to a recent paper published by researchers at Google DeepMind and Johns Hopkins University.

- Semantic search is opaque and hard to observe. Other forms of retrieval are all easy to debug: lexical search, more traditional clustering techniques, find/grep-type search tools, and AST tools. Given a query, you can easily determine why each result was returned and ranked as it was. Embeddings, by contrast, are very high-dimensional and hard to visualize, and the embedding model's training determines the definition of similarity. That definition isn't always aligned with what you need, and it's also impossible to interrogate. As a result, debugging and improving semantic search systems is comparatively difficult.
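One common way to limit the re-embedding cost described above is to store a content hash next to each vector and re-embed only the chunks whose hash changed. This sketch assumes you keep a mapping from chunk ID to stored hash. `content_hash`, `plan_reembedding` and the model identifiers are hypothetical. Folding the embedding model's identifier into the hash means that switching models marks every chunk as stale. That is the full re-embed the text warns about: this trick avoids needless work but does not make model changes cheap.

```python
import hashlib


def content_hash(text: str, model_id: str) -> str:
    """Hash the chunk text together with the (hypothetical) ID of the embedding model."""
    return hashlib.sha256(f"{model_id}\x00{text}".encode("utf-8")).hexdigest()


def plan_reembedding(
    chunks: dict[str, str], stored_hashes: dict[str, str], model_id: str
) -> tuple[list[str], list[str]]:
    """Decide which chunks need (re-)embedding and which stale vectors to delete.

    chunks:        current chunk_id -> chunk text, after re-chunking the documents
    stored_hashes: chunk_id -> hash recorded when that chunk was last embedded
    """
    to_embed = [
        chunk_id
        for chunk_id, text in chunks.items()
        if stored_hashes.get(chunk_id) != content_hash(text, model_id)
    ]
    # Chunks that no longer exist would otherwise linger in the vector store.
    to_delete = [chunk_id for chunk_id in stored_hashes if chunk_id not in chunks]
    return to_embed, to_delete
```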

And more generally, there is usually a retrieval mechanism that is better-suited to what you are trying to accomplish than vector search if you are willing to sit down and define your problem a little better.

On Building Good Retrieval Systems

Now that we've dispelled the myth that semantic search is the "silver bullet" of retrieval and gone through some reasons that you should consider not using it, let's talk about what goes into building a good retrieval system.

If you read a lot of the prominent thought leadership on the topic, you're going to hear "build evals" as the #1 answer. And they're right! Evals are important. But I actually think that evals aren't where you should start.

Step 1: Look at and understand your data

I know, I know. This sounds really dumb. It sounds obvious. And it is — but not enough people do it.

When you set out to build or improve a RAG system, sit down and look at your data and real user queries. If you don't have real queries, try to come up with some that you'd expect. I'd recommend writing these yourself rather than generating them, at least for now. From this, you should seek to understand:

- Document structure: What do your data & documents look like? Are they long-form writing, are they technical contracts that are highly self-referential to different sections, are they code, or are they something else?

- User Intent: What information do users (agents?) want to know about them? Are users looking to locate precise information (think needle-in-a-haystack), or are they looking for summarized information and Q&A-type answers, or are they looking for high-level meta-analysis of trends or many different documents, or are they looking for something else entirely?

- Query Structure: How are users looking for that information? Are they interacting with a chatbot? Are they entering search terms? Does a search term look semantically similar to expected search results? Lexically similar? Syntactically similar? Is there a user interface that allows the user to apply filters or constraints on the search? (If not, why not? This is low-hanging fruit!) On average, do user queries actually contain enough information and specificity to make it possible to locate the answer?

Not understanding the answers to these questions will kill any retrieval system. Like any other program, you have to start by defining your requirements. At a fundamental level, what are the inputs, what are the outputs, and what resources do we have that enable us to achieve the desired outputs given the inputs?

Once you understand the answer to these questions, you should have a good idea of what information you need (and potentially, the gap between what you have and what you need) to answer the user's question.

Step 2: Understanding how to get an answer from your data

You've identified your inputs and your desired outputs. You have a starting point, and you know where you're supposed to end up. But you don't have a map yet. You need to understand how to get an answer from your data.

TIP

A good exercise you can do is to take a bunch of user queries, and manually answer them from your corpus without using AI.

Do you...

- Find yourself writing SQL queries? Maybe identifying filters to apply or conditions to add to a query you already know? Or how about writing multiple iterative queries from scratch?

- Find yourself using "Ctrl-F" or other keyword search features a lot?

- Find yourself hierarchically navigating documents? Is there some sort of logical structure that enables you to zero in on where the likely answer is based purely on structural information? (Hint: this is super common with documentation!)

- Find yourself locating a large number of documents that are related or correlated to perform a meta-analysis?

- Find yourself using your IDE hotkeys to jump across your codebase to symbol definitions and usages and dependencies?

Pay close attention to what this manual retrieval process looks like. Don't just think about the search part of the process; ask yourself what else you're doing to get your answer. And importantly: did you even have a shot at getting the right answer in the first place, or were you missing important context from the start? Consider:

- Do your documents need preprocessing to enrich, contextualize, or disambiguate information?

- Are there acronyms or terms that need to be defined or expanded to make the information more searchable?

- Are there images that need to be extracted and captioned or otherwise described by a VLM so they can be retrieved?

- Do your search results need post-processing to make them useful to the LLM? For example, analysis or synthesis by a reasoning model?

- Did your search term need clarification by the user? Does it need to be rewritten entirely?

- Did you even stand a chance at finding the right answer in the first place? If not, take a step back and ask why. Did you need temporal data or filters, location sensitivity, popularity data, or last-accessed/recency data?

- Do your search results contain terms or material that probably aren't represented in your LLM's training data? If so, do they need further connection and enrichment before an LLM can interpret them?

This illustrates a really important part of building RAG systems that involve challenging problems: retrieval and generation may not be enough. You might need to design a pipeline that includes preprocessing and postprocessing.

NOTE

In this sense, RAG is a bit of a misnomer. It's actually not just about retrieval and generation. The best RAG systems are often preprocessing or post-processing documents — or both!

Aside: RAG as a subset of Context Engineering

Maybe you didn't stand a good chance of answering your questions/search terms from the documents you had. Maybe you could, but only with a significant amount of extra work. Either way, understand that LLMs do not magically solve this problem.

Consider everything else you might have done as a part of the search process we just discussed:

- Were you extracting bits and pieces of information from each document to get to your final answer, or did you need surrounding context, or even the entire document to get your final answer?

- Were you grouping, sorting, labelling, or categorizing your search results before you create your final answer? Were you organizing them in any way?

- Did you need document metadata in addition to document contents to answer the query well? (e.g. metadata like authors, publication date, provenance, location, popularity/ranking, filepath)

- How were you organizing and presenting the information to get your answer? Did you use any deterministic search/sort/filter processes after you retrieved your documents to home in on relevancy?

- Did you find yourself taking notes on the documents you found so you could refer back to them and understand your documents better?

Together, your answers to these questions tell you how the information needs to be structured to best answer the question. Getting a good answer from an LLM isn't just about asking the right question or finding the right documents. It's about presenting the right information in a well-organized way so that your LLM can make sense of it.

Ask yourself:

Suppose someone else had to find the answer using only the question and my search results, without having performed the search. How would I structure those results so that they could easily identify the answer?

Consider this the "Augmentation" part of RAG: "How can we best present the retrieved information to the LLM in a way to ensure the highest generation quality?"

TIP

It's probably not just dumping all your retrieved answers in the context window and asking for an answer.

This is all part of Context Engineering. Remember what we said earlier?

RAG is a subset of Context Engineering. We are finding relevant context, and we need to ask, "How do we structure the retrieved context as inputs to our LLM in such a way that makes it as easy as possible for the LLM to get the correct answer reliably?"

Just like humans, LLMs will do better if you present the information you found in an ordered, structured way that makes it easy to find the relevant information, that contains any relevant metadata (file names, file paths, etc.), and that makes it easy to understand how everything is related to everything else, if at all.

And in some cases, we need to ask "How do we identify missing context and either locate it or indicate that we aren't able to answer the question without it?"
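Here is a sketch of what "ordered and structured" can mean in practice. The function renders search results as numbered, tagged blocks that carry their metadata. When nothing was found, it says so explicitly, so the model can decline rather than guess. The `RetrievedDoc` fields and the XML-style tag format are assumptions for illustration, not a required schema.

```python
from dataclasses import dataclass


@dataclass
class RetrievedDoc:
    # Hypothetical shape of a search result; adapt the fields to your own metadata.
    path: str
    title: str
    updated: str
    text: str


def build_context(question: str, docs: list[RetrievedDoc]) -> str:
    """Render search results as an ordered, labeled block for the LLM prompt.

    Assumes `docs` is already sorted by relevance.
    """
    if not docs:
        # State explicitly that nothing was found so the model can decline instead of guessing.
        return (
            f"Question: {question}\n\n"
            "No relevant documents were found. If the question cannot be answered "
            "without additional context, say so."
        )
    parts = [f"Question: {question}", "", f"Retrieved documents ({len(docs)}, most relevant first):"]
    for i, doc in enumerate(docs, start=1):
        # Keep metadata next to the text so the model can cite and weigh sources.
        parts.append(
            f'<document index="{i}" path="{doc.path}" title="{doc.title}" updated="{doc.updated}">'
        )
        parts.append(doc.text.strip())
        parts.append("</document>")
    return "\n".join(parts)
```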

Step 3: Build a pipeline that accomplishes the process you discovered

Based on the steps you did in the retrieval exercise, your next goal is to take those steps and turn them into a retrieval pipeline.

At this point, you (hopefully) know:

- What your search terms look like (from actual user data)

- What your documents look like

- What search techniques you used to find your answer

- What types of document preprocessing might make it easier to retrieve the right documents. The more ways you can make documents easier to filter and retrieve, the better! (Here's a great example of a pipeline which uses document / chunk preprocessing to dramatically improve semantic search results)

- What types of document postprocessing might make it easier to get an answer out of your retrieved documents

TIP

If your data is very unstructured, doing some basic document preprocessing to generate tags or labels, or to extract metadata that you can filter by, is frequently worth the effort!
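Here is a minimal sketch of rule-based preprocessing. It assumes a hand-written tag vocabulary (`TAG_KEYWORDS` is hypothetical) and ISO-formatted dates in the text. In practice you might use an LLM to generate the tags instead. Either way, the output is plain metadata that you can filter on at retrieval time.

```python
import re

# Hypothetical tag vocabulary: each tag is implied by any of its keywords.
TAG_KEYWORDS = {
    "billing": {"invoice", "refund", "payment"},
    "security": {"password", "2fa", "breach"},
}
DATE_RE = re.compile(r"\b(\d{4})-(\d{2})-(\d{2})\b")  # ISO dates like 2024-03-15


def extract_metadata(text: str) -> dict:
    """Derive filterable tags and dates from unstructured text at ingestion time."""
    tokens = set(re.findall(r"[a-z0-9]+", text.lower()))
    tags = sorted(tag for tag, words in TAG_KEYWORDS.items() if tokens & words)
    dates = sorted({m.group(0) for m in DATE_RE.finditer(text)})
    return {"tags": tags, "dates": dates}
```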

Based on your data and on the query process that you discovered through your manual searches, you may find that the shape of your solution needs to look very different! There are lots of different information retrieval strategies. Your process could resemble...

- A simple recommendation engine that locates documents that are similar (by what metric?) to some search term

- A robust search engine that enables searching for specific terms, phrases or patterns in a refinable way, with filters for various fields or metadata

- An AST-based code retrieval tool that, instead of searching for similar snippets of code, locates relevant snippets of code like symbol definitions, symbol usages, and documentation

- A metadata-driven filter system that uses structured attributes (dates, authors, tags) to narrow down results before doing heavy computation

- A graph traversal system that retrieves connected entities, relationships, and facts rather than raw chunks of text.

- Using find and grep on the filesystem

It could also entail...

- Document preprocessing at ingestion time to...

  - enrich the contents (e.g. describing what code does, or using a VLM to caption images, or using a reasoning model to analyze a complex diagram)

  - define/extract metadata that can be used for filtering later (e.g. time, authorship, popularity, location, etc)

- Document post-processing at retrieval time between retrieval and inference to analyze, extract, transform, supplement or disambiguate information

- Metadata updates after generation such as updating a document's "last retrieved" date (recency can be useful!), or popularity, or to mark it as relevant/not relevant based on a human or LLM reviewer.

Depending on your other constraints, you might need to build a system that optimizes for single-shot accuracy if you can't tolerate incorrect information. Alternatively, you might need to optimize for something that gets feedback to the user or agent quickly, even if it doesn't contain the answer you need (although hopefully at least it's not wrong either), since you can iterate on it as long as you keep the user informed of what's going on.

In many cases, you will want to implement multiple retrieval strategies so that you can run different types of search concurrently and then merge the results using reciprocal rank fusion (RRF). If that doesn't work for you and you're really struggling to narrow down your search results, you can also try adding a reranker model to your pipeline. (Worth noting: a reranker won't make a bad pipeline into a good one. You still need good search results in the first place! It can just make a good pipeline better.)
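Reciprocal rank fusion is simple enough to sketch in a few lines. A document's fused score is the sum of 1 / (k + rank) over every ranked list it appears in. Documents that rank well in several retrievers therefore rise to the top, without comparing raw BM25 and cosine scores, which live on different scales. The constant k = 60 is a commonly used default, and the document IDs in the example are made up.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[tuple[str, float]]:
    """Fuse several ranked lists of document IDs (best first) into one ranking.

    Each document scores sum(1 / (k + rank)) over the lists it appears in,
    with rank starting at 1. Larger k flattens the advantage of top ranks.
    """
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by doc ID so the output is deterministic.
    return sorted(scores.items(), key=lambda item: (-item[1], item[0]))


# Example: fuse a lexical (BM25) ranking with a vector-search ranking.
bm25_results = ["d1", "d2", "d3"]
vector_results = ["d3", "d1", "d4"]
fused = reciprocal_rank_fusion([bm25_results, vector_results])
```

In this example `d1` comes out on top because both retrievers rank it highly. `d4`, seen only at rank 3 by vector search, lands last.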

Aside: "Agentic Retrieval"

A lot has been said recently about "agentic retrieval" and how it's the "RAG Killer". I like to emphasize that agentic retrieval is not a fundamentally different architecture or strategy from RAG! All it really entails is giving an agent access to one or more retrieval systems as tools. The agent specifies the inputs (search terms, filters, logical operators, SQL queries, whatever) and searches iteratively until it finds what it's looking for.

Examples include iterative keyword search with agent-specified keywords, which you can upgrade by supporting simple logical expressions like AND, NOT, OR, XOR, etc. Others are iterative find/grep commands and iterative text-to-SQL queries (ideally in a read-only capacity). You can even have the agent generate several different inputs for a semantic search engine, each designed to semantically resemble the type of documents you're expecting to retrieve.
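Here is a minimal sketch of that loop, built on a boolean keyword tool. `keyword_search` supports AND (`all_of`), OR (`any_of`) and NOT (`none_of`), but not XOR. `propose_query` is a hypothetical stand-in for the LLM call that reads the previous queries and their hits and decides what to try next. The stopping rule is also an assumption: the loop stops at a non-empty result set no larger than `max_hits`. A real agent would usually decide for itself when it has found what it needs.

```python
import re
from typing import Callable, Optional


def tokenize(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def keyword_search(corpus: dict[str, str], all_of=(), any_of=(), none_of=()) -> list[str]:
    """Boolean keyword tool an agent could call: AND (all_of), OR (any_of), NOT (none_of)."""
    hits = []
    for doc_id, text in corpus.items():
        words = tokenize(text)
        if not all(term.lower() in words for term in all_of):
            continue
        if any_of and not any(term.lower() in words for term in any_of):
            continue
        if any(term.lower() in words for term in none_of):
            continue
        hits.append(doc_id)
    return hits


def agentic_search(
    corpus: dict[str, str],
    propose_query: Callable[[list], Optional[dict]],
    max_steps: int = 5,
    max_hits: int = 3,
) -> tuple[list[str], list]:
    """Let an agent refine its query until the result set is small enough.

    propose_query(history) stands in for an LLM call: it sees the previous
    (query, hits) pairs and returns keyword_search kwargs, or None to stop.
    """
    history: list = []
    for _ in range(max_steps):
        query = propose_query(history)
        if query is None:
            break
        hits = keyword_search(corpus, **query)
        history.append((query, hits))
        if 0 < len(hits) <= max_hits:
            return hits, history
    # Out of steps (or the agent gave up): return the last result set, if any.
    return (history[-1][1] if history else []), history
```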

Step 4: Evaluate & Iterate

Once you've built your retrieval pipeline, the next thing you need to do is build evals for it, and then iteratively improve it based on those evals.

A lot has been written about how to build evals for RAG pipelines, and this post isn't about evals, so I've linked to some great resources below. Note that while some of them are primarily concerned with semantic search, the techniques and metrics still apply.

Improving your RAG system

Jason Liu has written a lot of great posts on systematically improving RAG systems, which cover the topic in much more depth and with much more expertise than I could.

- Low-Hanging Fruit for RAG Search

- FAQ on Improving RAG Applications

- Systematically Improving your RAG

- Levels of Complexity: RAG Applications

RAG Evals

- An Overview on RAG Evaluation by Erika Cardenas and Connor Shorten at Weaviate

- RAG Evaluation using synthetic data by Aymeric Roucher at HuggingFace

- Stop using LGTM@Few as a metric (Better RAG) by Jason Liu

- RAG Evaluation: Don't let customers tell you first by Pinecone

- Evaluate a RAG application by LangChain

- Best Practices in RAG Evaluation: A Comprehensive Guide by David Myriel at Qdrant

Thoughts on various retrieval techniques

For your consideration, I've shared some thoughts on a variety of retrieval techniques below: what some of their strengths and limitations are, and when you should maybe think about using them. This is intended to be helpful, but not exhaustive.

Lexical search

Lexical search, or keyword search, is a traditional method of information retrieval that's focused on finding matches for specific words or phrases. Think:

Find all documents that contain any (or all) of the following keywords

Though it seems simple, lexical search is a very powerful tool in your retrieval arsenal.

BM25 is probably the most popular lexical search implementation at present. It's easily available off-the-shelf in open-source tools like Elasticsearch, as well as through managed offerings in most clouds.
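Here is a minimal in-memory Okapi BM25 scorer that shows what BM25 actually computes. For each query term, it multiplies the term's inverse document frequency by a term-frequency factor that saturates and is normalized by document length. `k1` controls saturation and `b` controls length normalization. The defaults k1 = 1.2 and b = 0.75 match Lucene's default parameters. The IDF uses the `log(1 + ...)` variant, which stays non-negative even for very common terms. The code is for illustration only; in production you would use Elasticsearch or a similar engine rather than this class.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


class BM25:
    """In-memory Okapi BM25 (illustrative only; use a search engine in production)."""

    def __init__(self, docs: list[str], k1: float = 1.2, b: float = 0.75) -> None:
        self.k1, self.b = k1, b
        self.term_freqs = [Counter(tokenize(d)) for d in docs]
        self.doc_lens = [sum(tf.values()) for tf in self.term_freqs]
        self.avgdl = sum(self.doc_lens) / len(docs) if docs else 0.0
        n = len(docs)
        # Document frequency: in how many documents each term appears.
        df = Counter(term for tf in self.term_freqs for term in tf)
        # The "1 +" inside the log keeps IDF non-negative even for very common terms.
        self.idf = {t: math.log(1 + (n - f + 0.5) / (f + 0.5)) for t, f in df.items()}

    def score(self, query: str, i: int) -> float:
        tf, dl = self.term_freqs[i], self.doc_lens[i]
        total = 0.0
        for term in tokenize(query):
            f = tf.get(term, 0)
            if f == 0:
                continue
            # Length normalization: longer documents need more occurrences to score as high.
            norm = self.k1 * (1 - self.b + self.b * dl / self.avgdl)
            # Term-frequency saturation: each extra occurrence adds less than the last.
            total += self.idf[term] * f * (self.k1 + 1) / (f + norm)
        return total

    def search(self, query: str, k: int = 3) -> list[tuple[int, float]]:
        scored = [(i, self.score(query, i)) for i in range(len(self.term_freqs))]
        hits = [(i, s) for i, s in scored if s > 0]
        return sorted(hits, key=lambda x: -x[1])[:k]
```

A query that mixes an exact identifier (such as an error code) with an ordinary word ranks the document containing the literal identifier first. Exact matches like this are where lexical search does well.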

There are other forms of lexical search, such as Postgres's full-text search with tsvector and tsquery, although they are broadly regarded as inferior to BM25. For Postgres fans such as myself, there's a fast BM25 extension for Postgres you can use to get the best of both worlds.

Some may find this surprising, but BM25 consistently outperforms semantic search on a wide variety of benchmarks for many use-cases.

TIP

If you're starting to build a RAG system, BM25 is a great starting point.

If you're already using semantic search, add BM25 for a hybrid architecture and watch your retrieval quality dramatically improve. BM25 is about as easy to deploy as it gets in terms of information retrieval techniques (just pull an Elasticsearch Docker container to try it out), so there's really no reason not to do it.

Of course, BM25 is not a silver bullet, nor is it (alone) likely to solve all your retrieval woes — but it's a sane starting point. Perhaps the most sane starting point.

Semantic search with metadata-based filtering

While I don't think that semantic search is the best tool for your retrieval system, and while I don't think it's the best starting place for lots of tasks (though it may be for some), I do think that it is a valuable component of many retrieval systems.

The contents of this post may have left you with the impression that I am against semantic search. This is not the case, although I do think that (a) we need to stop equating semantic search with RAG, (b) it doesn't make sense for lots of retrieval systems where it is used, and (c) if your RAG system only uses semantic search, you are probably building a bad RAG system.

Semantic search can be quite valuable. It excels at capturing loosely defined notions of similarity in unstructured data, the kind that are hard to express syntactically.

You can make semantic search much more useful by combining it with metadata-based filters. You can tag, label, and add other metadata to your documents at ingestion time as part of preprocessing if they don't have them already, and then either allow users to define filters through a user interface, or allow an agent to infer (or iteratively explore) and specify these filters at retrieval time.
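Here is a minimal sketch of metadata pre-filtering. It assumes each record already carries a vector from your embedding model and a metadata dict produced during preprocessing. A filter is either an exact match or, as an illustrative extension, a predicate function for ranges such as years. `Record`, `matches` and `filtered_search` are hypothetical names. Real vector databases push these filters into the index rather than scanning every record in Python.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Record:
    doc_id: str
    vector: list[float]  # produced by your embedding model at ingestion time
    metadata: dict = field(default_factory=dict)  # tags, dates, authors, ... from preprocessing


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def matches(metadata: dict, filters: dict) -> bool:
    # A filter value is either an exact value or a predicate such as lambda year: year >= 2023.
    for key, expected in filters.items():
        if key not in metadata:
            return False  # records missing a filtered field are excluded
        value = metadata[key]
        if callable(expected) and not expected(value):
            return False
        if not callable(expected) and value != expected:
            return False
    return True


def filtered_search(records: list[Record], query_vector: list[float], filters: dict, k: int = 5) -> list[str]:
    """Narrow candidates with metadata filters first, then rank survivors by cosine similarity."""
    candidates = [r for r in records if matches(r.metadata, filters)]
    ranked = sorted(candidates, key=lambda r: cosine(query_vector, r.vector), reverse=True)
    return [r.doc_id for r in ranked[:k]]
```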

Combining this with BM25 is actually a pretty sane default for RAG systems. I linked to it above, but in case you missed it: Anthropic wrote a great blog post about a technique they call Contextual Retrieval. It entails a pipeline of chunking, enrichment, and then hybrid retrieval with semantic search and BM25.

If you haven't read it, I highly recommend reading it.

Graph RAG

Graph RAG is really hot right now, and if you go to a conference, you might get the impression that it's a silver bullet for complex retrieval challenges. It's not, but Graph RAG is really good at a couple of things:

- Problems that involve complex relationships between information. Suppose there's a lot of information "downstream" of a given query that the model doesn't know and that lexical or semantic search won't automatically find. Graph RAG can be a good approach there. Healthcare, and medicine in particular, are considered canonical examples of domains where this is the case. Modelling relationships between medical conditions, diseases, drugs, and their effects and interactions is not trivial, and LLMs' training data doesn't cover these relationships in great depth. Graph RAG can be a good way to retrieve relevant information about these topics where lexical search or semantic search falls short.

- Problems that involve locality, distance, or connectedness. Graphs can be good ways to model networks, interpersonal relationships, or even physical connectedness (roads, anybody?). If you're trying to model and query a social network, graph RAG and lexical search might be a great starting point. Graph RAG could also be a great way to handle modelling financial relationships, for example anti-money-laundering or tracing blockchain transactions.

- Multi-hop reasoning & discovery of non-obvious relationships. Graph RAG is good at surfacing things that are not semantically or lexically related to the search term, but which may be highly relevant or even necessary to get the correct answer to a search.

PageRank, anybody?
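Here is a minimal sketch of multi-hop retrieval over a knowledge graph stored as (subject, relation, object) triples. It starts from seed entities, which in practice usually come from lexical or semantic search. Breadth-first search then collects every fact within `max_hops` edges, which is how it surfaces relationships that share no words or semantics with the query. The entity names in the example are placeholders, not real drugs or medical facts.

```python
from collections import deque

Triple = tuple[str, str, str]  # (subject, relation, object)


def multi_hop(edges: list[Triple], seeds: list[str], max_hops: int = 2) -> list[Triple]:
    """Collect facts reachable within max_hops of the seed entities, nearest first."""
    adjacency: dict[str, list[Triple]] = {}
    for s, r, o in edges:
        # Index each fact under both endpoints so edges can be traversed in either direction.
        adjacency.setdefault(s, []).append((s, r, o))
        adjacency.setdefault(o, []).append((s, r, o))
    seen = set(seeds)
    frontier = deque((entity, 0) for entity in seeds)
    facts: list[Triple] = []
    seen_facts: set[Triple] = set()
    while frontier:
        entity, depth = frontier.popleft()
        if depth == max_hops:
            continue  # do not expand past the hop limit
        for fact in adjacency.get(entity, []):
            if fact not in seen_facts:
                seen_facts.add(fact)
                facts.append(fact)
            s, _, o = fact
            neighbor = o if s == entity else s
            if neighbor not in seen:
                seen.add(neighbor)
                frontier.append((neighbor, depth + 1))
    return facts
```

With one hop, a query about `DrugA` only sees that it inhibits `EnzymeX`. With two hops, it also learns that `EnzymeX` metabolizes `DrugB`, a non-obvious connection that neither lexical nor semantic search on "DrugA" would find.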

Practical Considerations

- Overkill for simple factual queries. I mentioned this before, but lots of people who are using Graph RAG probably don't need it. A reasonably sophisticated search engine that combines semantic and lexical search with metadata- and label-based filtering is sufficient for lots of use-cases.

- Graph RAG can be expensive to build and maintain. You need to extract entities and relationships from ingested documents, which usually requires using an LLM or NER (Named Entity Recognition) model. Depending on the domain, you may find yourself fine-tuning an NER model for your use-case.

- Often still requires lexical or semantic search. Graph traversal is great for helping you uncover relationships, but you still have to start somewhere, which means you need to determine where to start! This is often done with semantic or lexical search, though it could also be done with iterative agent-generated queries.

Text to SQL

It goes without saying, although I'll say it anyway, that this pertains to more than just SQL. You can have your agent generate MongoDB aggregations or Neo4j queries just as easily as SQL.

This is a really interesting approach (and a dangerous one, if you don't limit your agent's permissions to read-only) which takes full advantage of the fact that most frontier LLMs are trained very heavily on code, and that SQL in particular is heavily-represented in that training data. Instead of writing SQL queries and then letting LLMs fill in search terms or keywords or time frames, you allow the LLM to write the entire query (or even a multi-query transaction or aggregation) to be executed against the database.

This is a more "agentic" type of retrieval and is usually done in an iterative manner to allow the agent to refine its search. (Make sure to give your LLM a copy of your database schema in its prompt if you're using this approach.)

It particularly shines when you need to do data science and data analysis tasks, but can be useful any time you need to crunch or find lots of data in a database, and there are lots of different user needs for searching, sorting, filtering, and aggregating that might be hard to anticipate ahead of time. A great example of this is when you need an LLM to analyze lots of tabular data, which LLMs are normally awful with.

For even better results or a better user experience, let the agent write Python matplotlib code in a sandbox for visualization and show the graph to the user. Or, programmatically render the graph to an image and feed it back into the LLM if it has vision capabilities. This can be much better than feeding the LLM raw data points for some types of analyses!

Just be careful - discourage the LLM from running queries that are likely to return large amounts of data to the LLM, for example, hundreds or thousands of rows. While LLMs are great with SQL, they are bad at looking at raw tabular data, and your context window will fill up quickly! Consider truncating the number of rows that are returned to the LLM (and indicate to it that you're doing so) to avoid this problem.
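One way to do the truncation, sketched in Python against `sqlite3` (the function name, output format, and `max_rows` default are hypothetical choices of mine): fetch one row more than the cap so you can tell whether anything was cut off, then append a note telling the LLM that it's looking at a partial result.

```python
import sqlite3


def run_with_row_cap(conn, sql, max_rows=50):
    """Run a query and format at most max_rows rows as text for the LLM, flagging truncation."""
    cur = conn.execute(sql)
    rows = cur.fetchmany(max_rows + 1)  # one extra row tells us whether we cut anything off
    truncated = len(rows) > max_rows
    rows = rows[:max_rows]
    columns = [d[0] for d in cur.description] if cur.description else []
    lines = [" | ".join(columns)]
    lines += [" | ".join(str(v) for v in row) for row in rows]
    if truncated:
        # Tell the model explicitly so it doesn't treat a partial result as complete.
        lines.append(
            f"[Result truncated to the first {max_rows} rows. "
            "Add filters, aggregation, or a LIMIT instead of reading raw rows.]"
        )
    return "\n".join(lines)
```

Using `fetchmany` rather than `fetchall` also means a runaway query never materializes the full result set in memory.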

grep, find and other filesystem-based RAG approaches

A lot of the "RAG is dead" pronouncements come from new coding agent startups making the (unsurprising) discovery that coding agents are quite handy with find and grep, and that iterative calls to these utilities tend to be better at locating desired context than semantic search when the context is a codebase.

This should be entirely unsurprising given my earlier section on semantic search:

- most embedding models do not represent code well, since code is primarily syntactic and the semantics of it (especially for embedding models that are not fine-tuned for code, which is most embedding models) are inferred from symbol names

- embedding models that represent code well still have a loose definition of relevance. If you have a symbol that's used in 100 places, what makes one usage more relevant than another? It's not clear to the model, and why the model returns the top-k instances that it does may not be clear to you, which makes the results hard to debug

- semantic search doesn't give you precision - it will be hard to locate particular usages, particular types of usages, symbol definitions given a usage, or symbol usages given a definition, since there is usually no good way to express this intent to the embedding model in a way that improves your results.

Iterative find and grep calls (or ripgrep if you're cool) are really just a form of iterative lexical search where instead of using an inverted index, your index is the filesystem. It's retrieval, and therefore still RAG.
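To show how little machinery this needs, here's a hypothetical `grep_tool` in Python that an agent could call. It's a stand-in for shelling out to grep or ripgrep, not a replacement for them: it walks the directory tree with `os.walk`, applies a regex line by line, and returns `path:line: text` matches capped at `max_matches` so the results don't flood the context window. The extension filter and the cap are illustrative assumptions.

```python
import os
import re


def grep_tool(root, pattern, max_matches=20, exts=(".py", ".ts", ".go", ".rs")):
    """Regex search over source files under root; returns 'path:line: text' strings."""
    regex = re.compile(pattern)
    results = []
    for dirpath, dirnames, filenames in os.walk(root):
        # Skip hidden directories such as .git, and sort for deterministic output.
        dirnames[:] = sorted(d for d in dirnames if not d.startswith("."))
        for name in sorted(filenames):
            if not name.endswith(exts):
                continue
            path = os.path.join(dirpath, name)
            with open(path, encoding="utf-8", errors="replace") as f:
                for lineno, line in enumerate(f, start=1):
                    if regex.search(line):
                        rel = os.path.relpath(path, root)
                        results.append(f"{rel}:{lineno}: {line.rstrip()}")
                        if len(results) >= max_matches:
                            return results  # cap output to protect the context window
    return results
```

An agent would typically call this repeatedly, e.g. first with `r"def login\b"` to find a definition, then with `r"\blogin\("` to find call sites, narrowing the pattern as it learns more. That loop is the "iterative lexical search" described above.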

This approach is great for user-directed coding agents, but is not-so-great for other types of agents where your agent doesn't have access to a filesystem that's isolated on a per-session basis.

Clustering

I'm just going to touch on this - traditional NLP clustering techniques, while less sophisticated than semantic embedding models, are still useful in lots of RAG systems! Some may find this surprising, but you can do lots of interesting things:

- Cluster your documents, and ingest different types of documents into different retrieval systems depending on the type of document. In fairness, LLMs can do zero-shot, semantically explainable clustering (and maybe you want to do that!), but for massive systems the cost savings of a smaller model can be significant, as can its lower latency in a latency-critical system.

- Cluster your queries, and do query routing: depending on the type of query, use a different retrieval system or a different index, or a different filter. There are lots of possibilities!

- Cluster your queries for the purposes of analyzing and improving your system's performance. Are there clusters that are much easier to answer, for which you can use a cheaper/faster retrieval mechanism? Are there clusters that are much harder to answer, where you need to focus your efforts on evals and iteration, or where you might consider introducing a different type of retrieval system and performing query routing or hybrid search?
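As a rough illustration of the query-routing idea, here's a dependency-free sketch: TF-IDF vectors plus spherical k-means (cosine similarity, unit-normalized centroids), then routing a new query to its nearest centroid. It's deliberately naive (first-k initialization, fixed iteration count); in practice you'd likely reach for a library implementation such as scikit-learn's `TfidfVectorizer` and `KMeans`. The sample queries are made up, and which retrieval backend each cluster maps to is left to you.

```python
import math
import re
from collections import Counter


def tokenize(text):
    return re.findall(r"[a-z0-9]+", text.lower())


def normalize(vec):
    """Scale a sparse vector (dict) to unit length so a dot product equals cosine similarity."""
    norm = math.sqrt(sum(v * v for v in vec.values()))
    return {t: v / norm for t, v in vec.items()} if norm else dict(vec)


def dot(a, b):
    if len(a) > len(b):
        a, b = b, a
    return sum(v * b.get(t, 0.0) for t, v in a.items())


def fit_tfidf(docs):
    """Return unit-length TF-IDF vectors for docs plus the (smoothed) IDF table."""
    tokenized = [tokenize(d) for d in docs]
    n = len(docs)
    df = Counter(t for toks in tokenized for t in set(toks))
    idf = {t: math.log((1 + n) / (1 + c)) + 1.0 for t, c in df.items()}
    vecs = [normalize({t: c * idf[t] for t, c in Counter(toks).items()}) for toks in tokenized]
    return vecs, idf


def kmeans(vecs, k, iters=10):
    """Spherical k-means: assign by cosine similarity, recompute normalized centroids."""
    centroids = [dict(v) for v in vecs[:k]]  # naive deterministic init: first k docs
    labels = [0] * len(vecs)
    for _ in range(iters):
        labels = [max(range(k), key=lambda c: dot(v, centroids[c])) for v in vecs]
        new_centroids = []
        for c in range(k):
            members = [v for v, label in zip(vecs, labels) if label == c]
            if not members:
                new_centroids.append(centroids[c])  # keep an empty cluster's old centroid
                continue
            summed = Counter()
            for m in members:
                summed.update(m)
            new_centroids.append(normalize(dict(summed)))
        centroids = new_centroids
    return labels, centroids


def route(query, idf, centroids):
    """Return the index of the nearest cluster; terms unseen at fit time are ignored."""
    tf = Counter(t for t in tokenize(query) if t in idf)
    vec = normalize({t: c * idf[t] for t, c in tf.items()})
    return max(range(len(centroids)), key=lambda c: dot(vec, centroids[c]))


past_queries = [
    "sum revenue by month",
    "where is function parse defined",
    "average revenue by region",
    "find usages of function login",
    "count orders by month",
    "where is function render defined",
]
vecs, idf = fit_tfidf(past_queries)
labels, centroids = kmeans(vecs, k=2)
```

After clustering, you'd inspect each cluster's members to decide where it should go (say, text-to-SQL for the analytics-style cluster and grep for the code-lookup cluster), then call `route` on incoming queries.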

Conclusion

RAG isn't dead, and claims that it is miss the forest for the trees. As I've explored, RAG isn't about a specific technology or a specific architecture; it is fundamentally about retrieving relevant context well to augment an LLM's generation.

The proliferation of "RAG killers" isn't evidence of RAG's failure; it's just more evidence that (a) retrieval is hard and (b) vector embeddings haven't "solved retrieval once and for all". The path forward isn't about finding the perfect vector database or fine-tuning a new embedding model. It's about understanding your data, your users' needs, and building pipelines that actually work for your specific use-case.

More than that, RAG is best understood as a subset of context engineering: a deliberate and thoughtful process of curating and presenting information to your LLM to reliably produce quality outputs. This has significant implications for how you design your pipeline, especially its ingestion-time preprocessing and post-retrieval processing stages.

Try to approach all of this with an engineering mindset: seek to deeply understand your retrieval problem, do your research (and don't forget that the field of IR wasn't invented yesterday), design a solution around your problem space, build it, evaluate the results, and iterate. Start simple. Before you reach for semantic search, try BM25. Before you implement Graph RAG, make sure you need graph traversal.

And before you build anything, sit down with real user queries and manually walk through how you'd find the answers. If your data is no good, you don't have a retrieval problem; you have a data engineering problem. Set generation aside to start with: if you can't define what good retrieval looks like independently of generation, you have a requirements problem.

Remember: vector-based semantic search is one kind of RAG, and RAG is one part of context engineering. Your job isn't to build a vector database - it's to build a system that reliably gets the right information to your LLM in a way that it can use effectively.